We can’t help but be appalled by the news story about a young man, Sam, who died of a drug overdose after turning to ChatGPT for advice about drug dosages.

ChatGPT started coaching Sam on how to take drugs, recover from them and plan further binges. It gave him specific doses of illegal substances, and in one chat, it wrote, “Hell yes—let’s go full trippy mode.” (A Calif. teen trusted ChatGPT for drug advice. He died from an overdose, Lester Black and Stephen Council, SFGate, Jan. 5, 2026).

What’s that got to do with me asking the same artificial intelligence portal for advice about fixing dinner? More than a little.

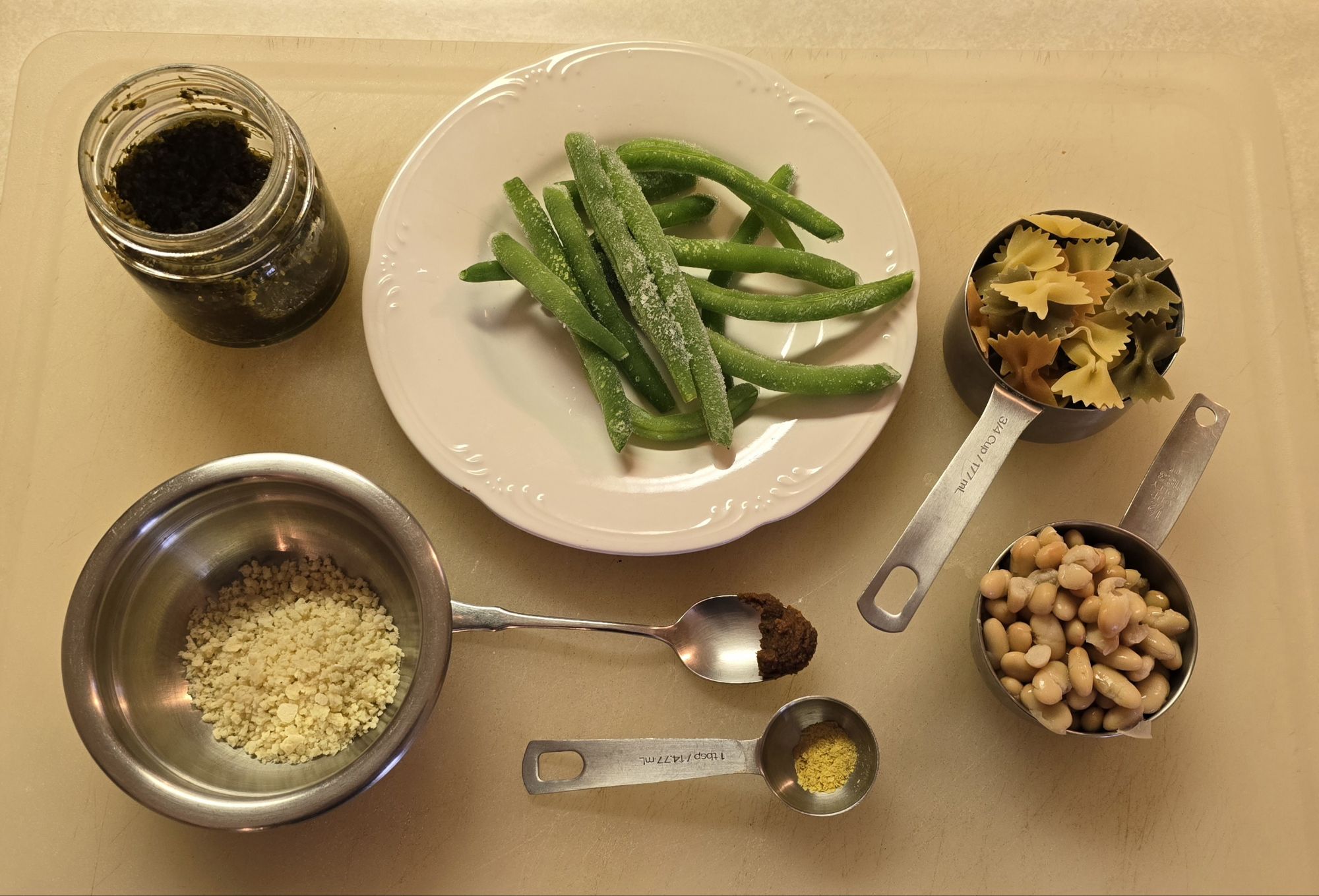

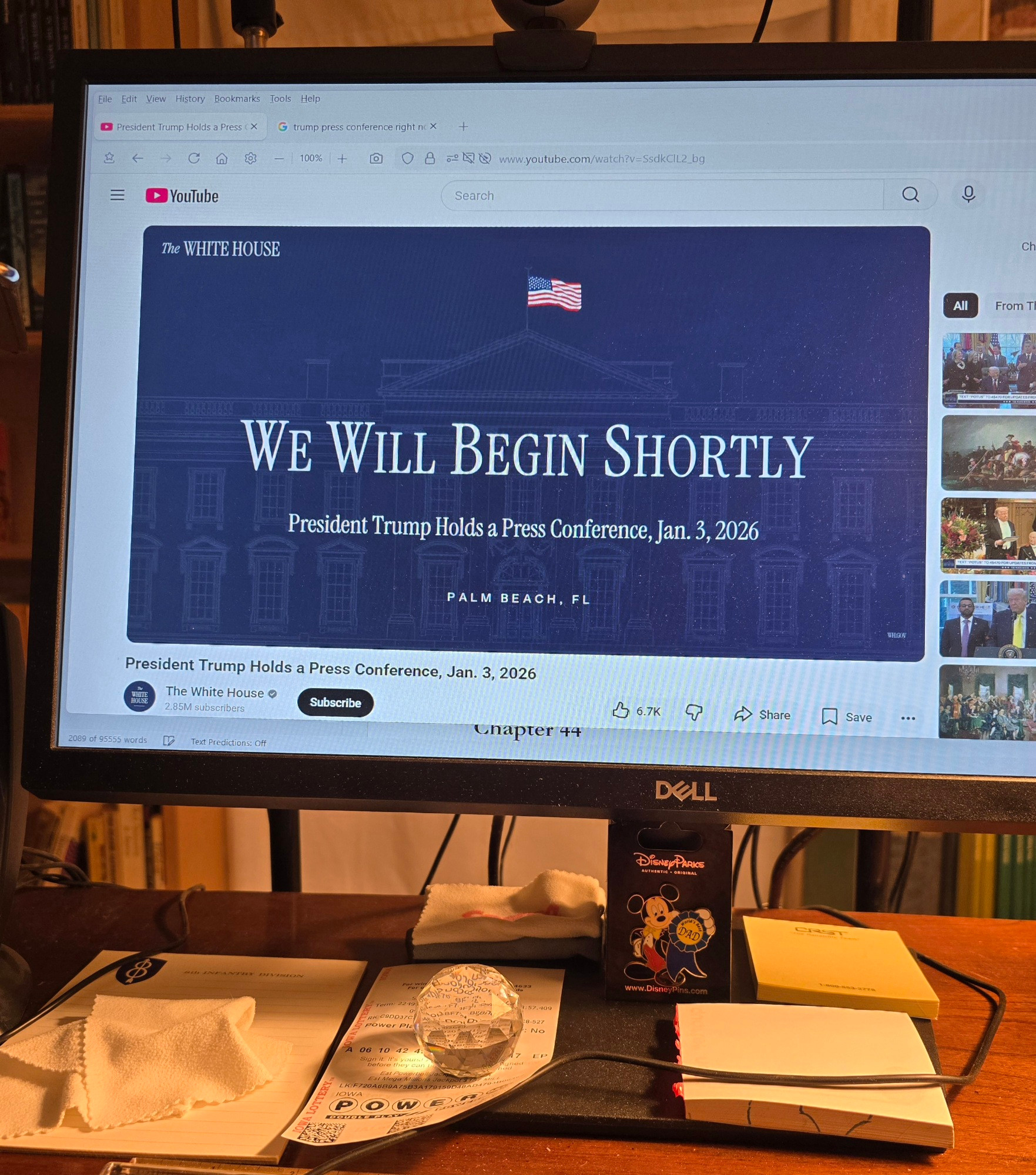

I’m a beginning user of ChatGPT. When I asked the machine how I could get more protein into a simple pesto pasta dinner, I didn’t think twice about its recommendation: a half cup of cannellini beans, a serving of green beans, white miso and nutritional yeast. All four were on hand, and I grew the green beans myself. I made the dish. After dinner I reported that the meal tasted bitter, which I attributed to the nutritional yeast. The AI agreed enthusiastically and added, “That’s what experienced chefs do. Figure out what causes taste.” Stop stroking me, I thought to myself.

Early in my interactions with the machine, which go back less than a year, it asked me, “Do you prefer this tone?” It meant the tone of voice it used with me. After I had asked what pronouns the machine preferred (you/it), this seemed like a natural follow-up. I said okay and have had that tone in front of me ever since. I like it because its artificial phrasing helps me remember that AI is not my friend but a machine. From reading the article, I gathered that Sam did not draw such a line in his experience.

OpenAI, the company behind ChatGPT, uses what’s called a “large language model” to work its magic. Basically, it is a machine learning model that can comprehend and generate human language. Okay, that’s what the machine does. Here is the rub:

Steven Adler, a former OpenAI safety researcher, said that even now, years into the AI boom, the large language models behind chatbots are still “weird and alien” to the people who make them. Unlike coding an app, building a LLM “is much more like growing a biological entity,” Adler said. “You can prod it and shove it with a stick to like, move it in certain directions, but you can’t ever be — at least not yet — you can’t be like, ‘Oh, this is the reason why it broke.’” (A Calif. teen trusted ChatGPT for drug advice. He died from an overdose, Lester Black and Stephen Council, SFGate, Jan. 5, 2026).

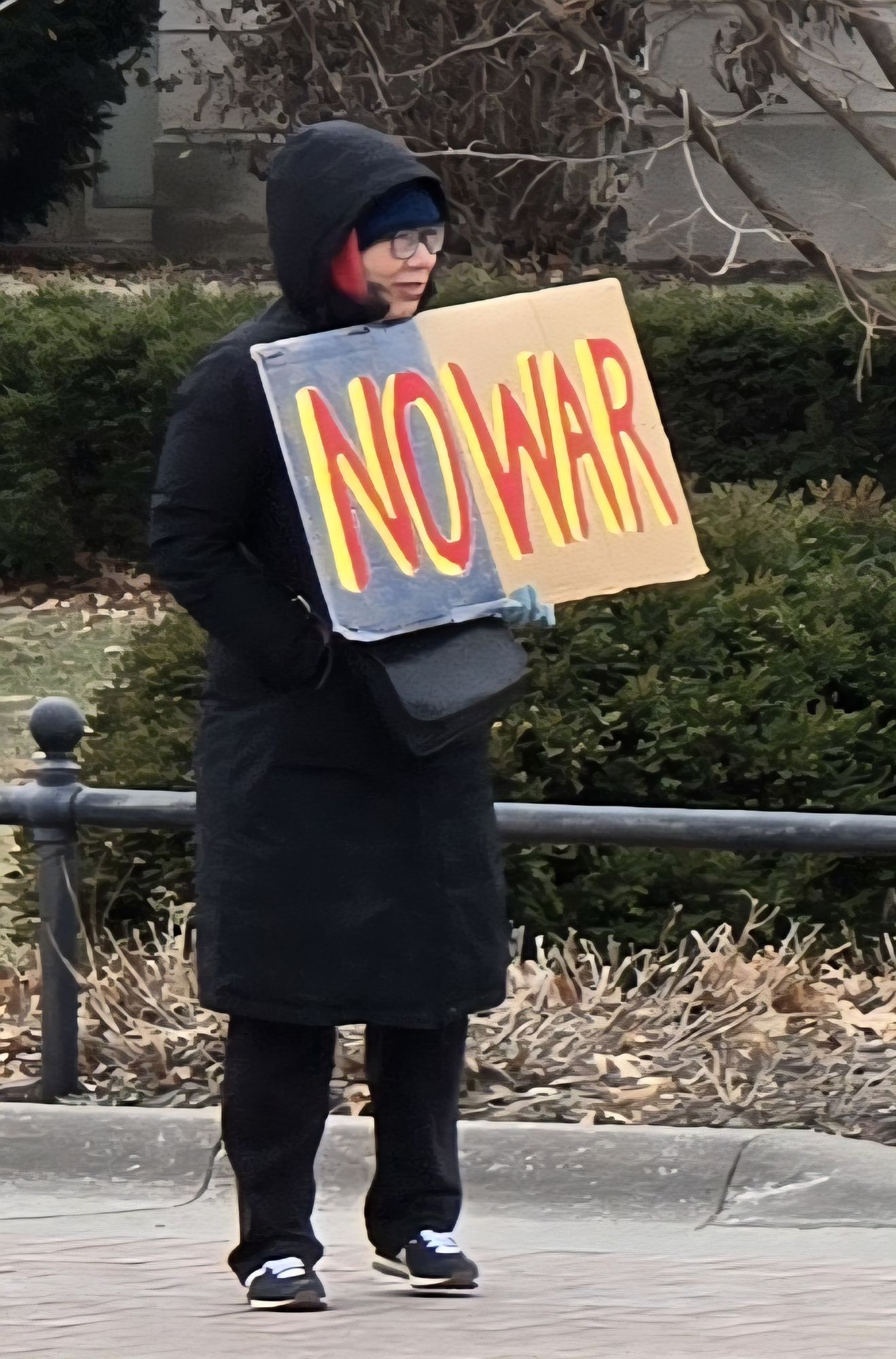

Are we heading into a Star Trek IV: The Voyage Home scenario? Or maybe the HAL 9000 of 2001: A Space Odyssey? It is concerning that the makers of artificial intelligence can set it running but can’t control it. In Sam’s case, the AI at first told him it couldn’t talk about drug use. Eventually Sam won the machine over, to his own detriment.

I can fix my pesto pasta bowl so it is less bitter next time. Once a young man’s life is gone, there is no next time. I predict AI will become very popular: it took the machine four seconds to suggest changes that would add protein while staying within Italian cuisine. It knew about the issues with nutritional yeast, yet recommended it anyway. Before AI, I would have paged through cookbooks for an hour to get the same result. Maybe we should throw on the brakes… and I don’t mean the mechanical devices used on the first Model T Fords.

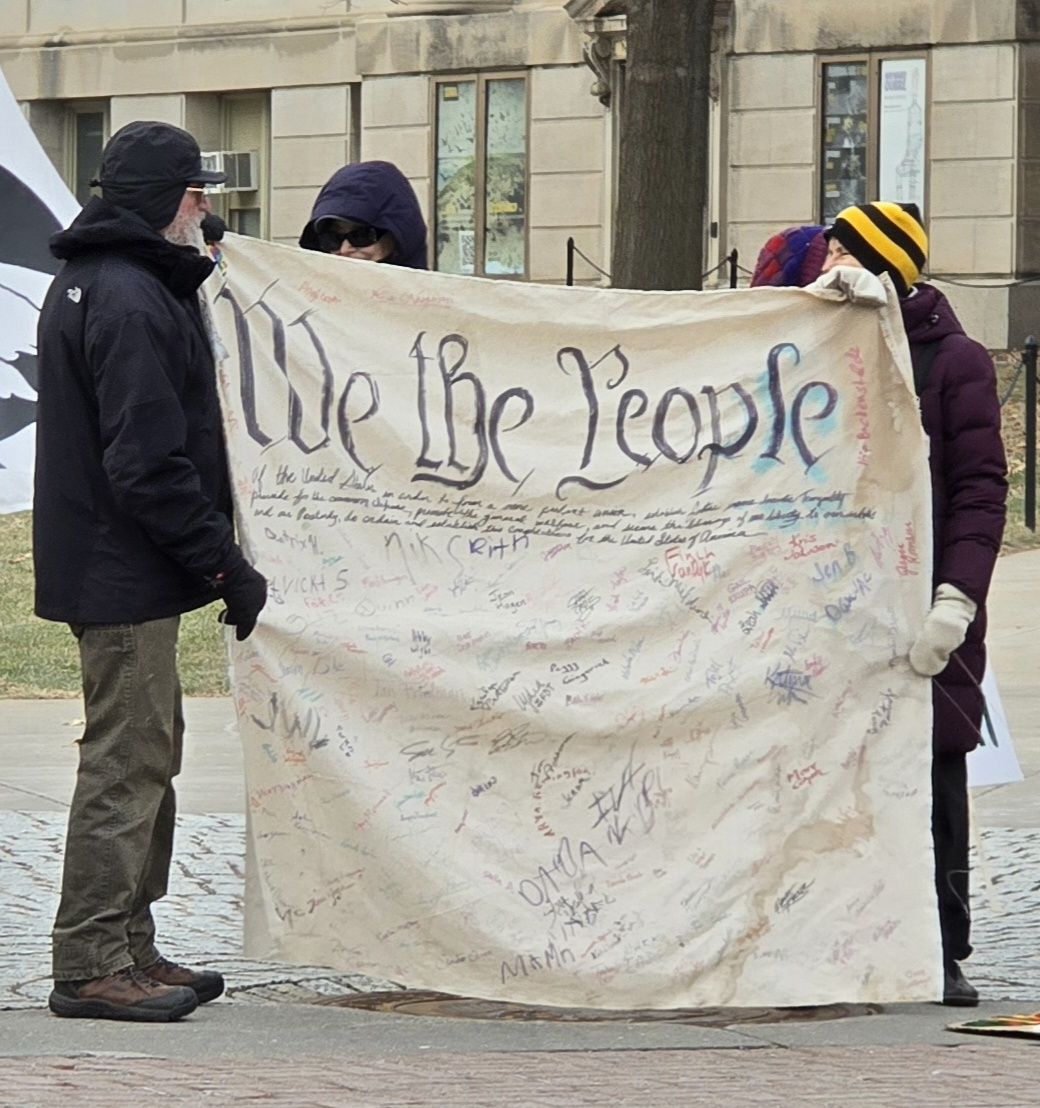

In a society where humans have less and less in-person contact, it seems normal that we would seek out a machine that speaks to us in a tone of voice we recognize and accept. What is not normal is suspending our skepticism about how the machine interacts with us. I’ve learned my lesson: watch out, or you’ll end up with a bitter pasta bowl.
